In Kubernetes and container orchestration, efficient monitoring, tracing, and logging of applications is pivotal. For AWS users, particularly those running Amazon Elastic Kubernetes Service (EKS) with AWS Fargate, the AWS Distro for OpenTelemetry (ADOT) plays a crucial role in streamlining these processes. This blog post looks at the role of the ADOT collector in EKS and explains why it is deployed as a StatefulSet in Fargate-based systems.

Understanding ADOT

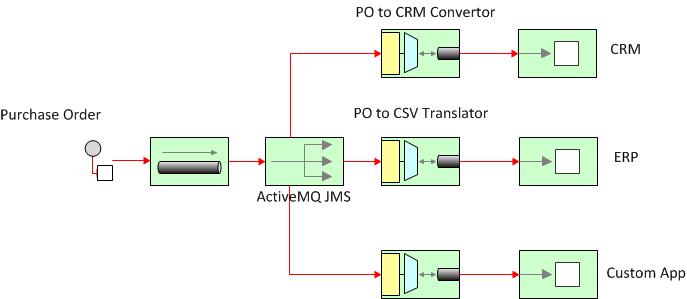

AWS Distro for OpenTelemetry (ADOT) is a secure, production-ready, AWS-supported distribution of the OpenTelemetry project. OpenTelemetry provides open-source APIs, libraries, and agents to collect traces and metrics from your application. You can then send the data to various monitoring tools for analysis and visualization, including AWS services such as Amazon CloudWatch and AWS X-Ray as well as third-party tools.
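To make the collect-then-export flow concrete, here is a minimal sketch of a collector configuration. It assumes applications send telemetry over OTLP and that traces go to AWS X-Ray and metrics to CloudWatch (through the `awsemf` exporter). The region and the CloudWatch namespace are placeholder assumptions, not values from any particular deployment.

```yaml
# Minimal ADOT collector configuration sketch; assumed values are marked.
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317   # conventional OTLP gRPC port
      http:
        endpoint: 0.0.0.0:4318   # conventional OTLP HTTP port

processors:
  batch: {}                      # group telemetry into batches before export

exporters:
  awsxray:
    region: us-east-1            # assumption: replace with your region
  awsemf:
    region: us-east-1            # assumption: replace with your region
    namespace: MyApp/Telemetry   # hypothetical CloudWatch metrics namespace

service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [awsxray]
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [awsemf]
```

Each pipeline wires one receiver through a processor to an exporter, so adding a third-party backend would usually mean adding another exporter and listing it in the relevant pipeline.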

Why ADOT in EKS?

Kubernetes environments, especially those managed through EKS, can become complex, hosting numerous microservices that communicate internally and externally. Tracking every request, error, and transaction across these services without impacting performance is where ADOT shines. It efficiently collects, processes, and exports telemetry data, offering insights into application performance and behavior, thereby enabling developers to maintain high service reliability and performance.

The ADOT Collector as a StatefulSet in AWS Fargate

How you deploy the ADOT collector in EKS depends on the underlying infrastructure: Node-based or Fargate. On Fargate the configuration differs in one important way: the collector runs as a StatefulSet rather than a DaemonSet. Here’s why this distinction is crucial.

Fargate: A Serverless Compute Engine

Fargate allows you to run containers without managing servers or clusters, so you can focus on designing and building your applications. This serverless approach, however, means that you don’t have direct control over the nodes running your workloads. That is a significant difference from a Node-based system, where a DaemonSet would be ideal for deploying agents like the ADOT collector across all nodes.
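Pods only run on Fargate when a Fargate profile selects them, typically by namespace. As a rough sketch, assuming the cluster is managed with eksctl, a profile covering the collector's namespace might look like the following. The cluster name, region, and profile name are hypothetical. The namespace is the one used by the collector manifest in this post.

```yaml
# eksctl ClusterConfig sketch; names and region are placeholders.
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: my-cluster               # hypothetical cluster name
  region: us-east-1              # assumption: replace with your region
fargateProfiles:
  - name: fp-observability       # hypothetical profile name
    selectors:
      # Pods created in this namespace are scheduled onto Fargate.
      - namespace: fargate-container-insights
```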

Why StatefulSet?

In Fargate, every pod runs in its own isolated environment and does not share the underlying host with other pods. A DaemonSet is designed to run a copy of a pod on each node in the cluster, which does not fit this model, and Fargate does not support DaemonSets. Instead, a StatefulSet is used to manage the deployment and scaling of a set of Pods and to provide guarantees about the ordering and uniqueness of these Pods. Here’s how the ADOT collector configuration looks when deployed as a StatefulSet:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: adot-collector
      namespace: fargate-container-insights
    ...

This configuration ensures that the ADOT collector runs reliably within the Fargate infrastructure, adhering to the serverless principles while providing the necessary observability features.
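The manifest above is abbreviated. As an illustration of what a fuller StatefulSet could contain, here is a hedged sketch rather than the exact manifest referenced in this post. The Service name, labels, service account, ConfigMap name, config path, image tag, and resource requests are all assumptions for illustration. The service account would also need IAM permissions to write to the chosen AWS backends.

```yaml
# Sketch of a complete StatefulSet for the collector; assumed values are marked.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: adot-collector
  namespace: fargate-container-insights
spec:
  serviceName: adot-collector-service    # assumed headless Service name
  replicas: 1
  selector:
    matchLabels:
      app: adot-collector                # assumed label
  template:
    metadata:
      labels:
        app: adot-collector
    spec:
      serviceAccountName: adot-collector # assumed; bind to an IAM role
      containers:
        - name: adot-collector
          # Public ADOT collector image; pin a specific version in practice.
          image: public.ecr.aws/aws-observability/aws-otel-collector:latest
          args:
            - "--config=/conf/adot-config.yaml"  # assumed config path
          resources:
            requests:                    # assumed sizing
              cpu: 250m
              memory: 512Mi
          volumeMounts:
            - name: adot-config
              mountPath: /conf
      volumes:
        - name: adot-config
          configMap:
            name: adot-collector-config  # hypothetical ConfigMap name
            items:
              - key: adot-config         # hypothetical key in the ConfigMap
                path: adot-config.yaml
```

Because the collector configuration comes from a ConfigMap, every replica created from this template starts with the same settings.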

Advantages of Using StatefulSet for ADOT in Fargate

- Isolation and Security: Each instance of the ADOT collector runs in its own isolated environment, enhancing security and reliability.

- Scalability: Easily scale telemetry collection in tandem with your application without worrying about the underlying infrastructure.

- Consistent Configuration: StatefulSet ensures that each collector instance is configured identically, simplifying deployment and management.

- Persistent Storage: If needed, StatefulSet can leverage persistent storage options, ensuring that data is not lost between pod restarts.

Conclusion

Integrating the ADOT collector in EKS as a StatefulSet for Fargate-based systems harmonizes with the serverless nature of Fargate, offering a scalable, secure, and efficient method for telemetry data collection. This setup not only aligns with the modern cloud-native approach to application development but also enhances the observability and operability of applications deployed on AWS, ensuring that developers and operations teams have the insights needed to maintain high performance and reliability.

By leveraging the ADOT collector in this manner, organizations can harness the full power of AWS Fargate’s serverless compute alongside EKS, driving forward the next generation of cloud-native applications with confidence.